The livelihoods of voice actors are under strain as allegations of artificial intelligence companies cloning and repurposing their voices without consent gain traction. A significant class-action lawsuit filed by Paul Skye Lehrman and Linnea Sage against the AI startup Lovo brings this tension to the forefront. The core of the dispute centers on claims that Lovo used voice recordings obtained under the guise of specific projects to create and market cloned voices, allegedly breaching contracts and infringing upon the actors' rights. This situation highlights a broader debate about the legal and ethical boundaries of AI technology in creative industries.

Background of the Dispute

The legal action taken by Lehrman and Sage stems from their dealings with Lovo. According to their lawsuit, Lovo engaged them for specific voice-over jobs through the freelance platform Fiverr.

Lehrman says he recognized his voice on a YouTube channel and later on a podcast, speaking words he had never recorded. He also says Lovo marketed his voice as part of its subscription service under the name "Kyle Snow." His voice was allegedly used as the default text-to-speech option and in product advertisements.

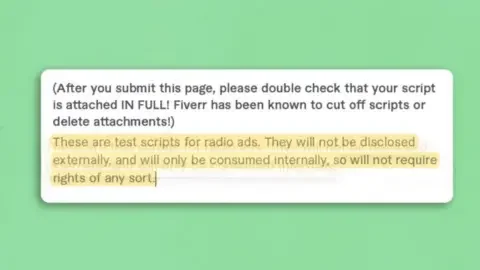

Sage, a voiceover artist with 14 years of experience, believes her voice was cloned in the same way after she was offered work on Fiverr in 2019 to record test scripts. She later discovered a clone of her voice on the same AI service.

Lovo has reportedly denied wrongdoing, pointing to communications with the actors as evidence of legal engagement. However, the actors contend these communications were a pretext for obtaining voice data for unauthorized cloning.

Legal Ramifications and Industry Concerns

The lawsuit filed by Lehrman and Sage is part of a growing trend of legal challenges against AI companies by artists concerned about the misuse of their work.

A federal judge has allowed parts of the lawsuit against Lovo to proceed, specifically claims of breach of contract and deceptive business practices. Claims related to federal copyright infringement regarding voice data used for AI training were dismissed.

This case touches upon a significant gap in current legislation. Experts note that there are currently no federal laws specifically governing the use of AI to mimic a person's voice.

The situation is causing widespread concern within the voice-over community. Some actors describe the experience as a "violation of our humanity," fearing that widespread voice cloning could undermine their profession.

Evidence and Allegations

The plaintiffs have presented evidence to support their claims regarding how Lovo allegedly acquired their voices and subsequently utilized them.

The lawsuit alleges that Lovo obtained the recordings by offering work on specific projects through freelance platforms.

Lehrman claims his voice served as the default text-to-speech voice in Lovo's software and was promoted as one of the best available, appearing in advertising and in explanations of the product.

Sage found a clone of her voice within Lovo's offerings.

Lovo has maintained that the exchanges between the actors and the anonymous Fiverr users who commissioned the recordings, which are detailed in the actors' lawsuit, constituted a legal engagement.

Broader Impact on Creative Professions

The dispute between voice actors and AI companies like Lovo has far-reaching implications beyond the immediate legal battle, raising fundamental questions about intellectual property, consent, and the future of creative work in the age of AI.

Ethical Use of AI: The case prompts a critical examination of how AI technology is developed and deployed, particularly when it involves replicating human characteristics like voice.

Legislative Response: The absence of specific federal laws covering AI voice mimicry is a central issue, and some have pointed to state legislation such as Tennessee's ELVIS Act as a template for future protections.

Industry Standards: The allegations against Lovo highlight the need for clearer industry standards and ethical guidelines for AI companies interacting with creative professionals.

Fear of Job Displacement: Actors express anxiety that AI-generated voices could displace human actors, fundamentally altering the employment landscape in their field.

Expert Perspectives on AI and Voice Cloning

Industry experts and legal analysts are observing these developments closely, noting the rapid advancement of AI technology and its challenges to existing legal frameworks.

Dr. Dominic Lees, an expert in AI in film and television, says current privacy and copyright laws have not kept pace with advances in AI voice cloning. He notes that even prominent figures such as David Attenborough have limited recourse when their voices are cloned.

Legal analysts point out that although the federal copyright claims over voice data used for AI training were dismissed in the Lehrman and Sage lawsuit, the survival of the breach of contract and deceptive business practice claims shows that courts are still grappling with the nuances of these cases.

The case echoes other controversies, such as accusations that OpenAI used a voice resembling Scarlett Johansson's after failing to secure her consent, and the earlier case in which Bette Midler sued over an advertisement that featured a sound-alike singer.

Conclusion and Future Outlook

The lawsuit filed by Paul Skye Lehrman and Linnea Sage against Lovo marks a pivotal moment in the ongoing debate between AI innovation and the rights of creative professionals. The case will proceed on claims of breach of contract and deceptive business practices, but the underlying issue of unauthorized voice cloning by AI firms remains a significant concern. The judicial process will likely clarify how existing laws can be applied or adapted to these novel technologies, and the outcome of this and similar cases could set precedents for the ethical development and use of AI voice generation, shaping the future of the voice-over industry and beyond. Meanwhile, the absence of specific federal regulation remains a focal point, underscoring the urgent need for legislative action to protect artists' voices and likenesses in the digital age.

Sources Used:

BBC News: "A tech firm stole our voices - then cloned and sold them" (Aug 31, 2024) - Provides context on how AI firms allegedly obtain voice data and their responses to accusations.🔗 https://www.bbc.com/news/articles/c3d9zv50955o

Los Angeles Times: "Voice clones pose an 'existential crisis' for actors: 'It's a violation of our humanity'" (Mar 24, 2025) - Details the emotional and professional impact on actors, mentioning OpenAI and ElevenLabs.🔗 https://www.latimes.com/entertainment-arts/story/2025-03-24/ai-voice-clones-replication-voice-actors-job-loss-siri-tiktok

Voice Over News: "Voice Actors Sue AI Company Over Alleged Voice Cloning and Contract Breach" (Jun 26, 2024) - Introduces the class-action lawsuit against Lovo, highlighting claims of contract breach and illegal cloning.🔗 https://www.voiceovernews.com/voice-actors-sue-ai-company-over-alleged-voice-cloning-and-contract-breach/

The Hollywood Reporter: "Actors Hit AI Startup With Class Action Lawsuit Over Voice Theft" (May 16, 2024) - Reports on the lawsuit against Lovo, specifically mentioning Paul Skye Lehrman and Linnea Sage, and the alleged use of his voice under the name "Kyle Snow."🔗 https://www.hollywoodreporter.com/business/business-news/actors-hit-ai-startup-with-class-action-lawsuit-over-voice-theft-1235900689/

Digital Music News: "Voice Actors Suing Lovo AI Over Breach of Contract" (Jun 27, 2024) - Discusses the lawsuit against Lovo and the lack of federal laws protecting against AI voice mimicry.🔗 https://www.digitalmusicnews.com/2024/06/27/voice-actors-sue-lovo-ai-breach-of-contract/

NDTV: "'Stunned': Voice Artists Sue AI Company, Claiming It Breached Contracts And Cloned Voices" (Sep 2, 2024) - Covers the lawsuit by Lehrman and Sage against Lovo, detailing their allegations of being contacted via Fiverr.🔗 https://www.ndtv.com/feature/stunned-voice-artists-sue-ai-company-claiming-it-breached-contracts-and-cloned-voices-6473716

CBS News: "Two voice actors sue AI company over claims it breached contracts, cloned their voices" (Jun 26, 2024) - Reports on the federal class action lawsuit against Lovo, mentioning the lack of federal laws on AI voice mimicry.🔗 https://www.cbsnews.com/news/two-voice-actors-sue-ai-company-lovo/

The Guardian: "AI cloning of celebrity voices outpacing the law, experts warn" (Nov 19, 2024) - Discusses how AI voice cloning technology is advancing faster than legal frameworks, citing Dr. Dominic Lees.🔗 https://www.theguardian.com/technology/2024/nov/19/ai-cloning-of-celebrity-voices-outpacing-the-law-experts-warn

BBC News: "Federal judge says voiceover artists AI lawsuit can move forward" (Jul 12, 2025) - Details the judge's decision to allow parts of the lawsuit by Lehrman and Sage against Lovo to proceed, while dismissing copyright claims.🔗 https://www.bbc.com/news/articles/cedgzj8z1wjo