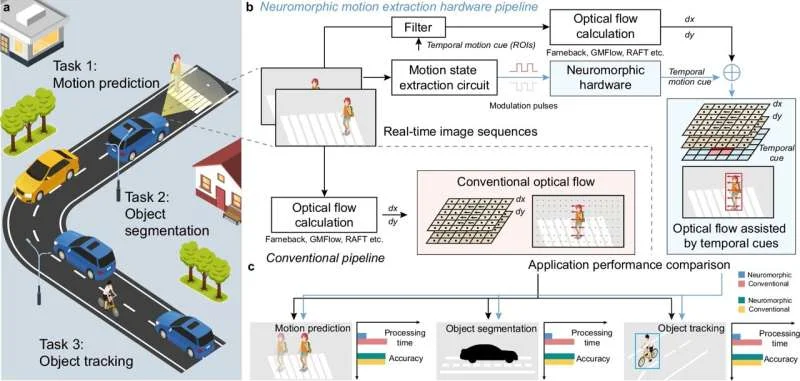

A new type of computer chip, designed to work like the human brain, is showing promise for helping robots and self-driving cars react to movement much faster. This advancement aims to solve a key problem: the delay in processing visual information, which is crucial for machines to interact safely with their surroundings. By mimicking biological processes, this technology could lead to more responsive and capable autonomous systems.

Background: The Need for Speed in Machine Vision

Current systems that allow machines to "see" often capture images as a series of still pictures. Motion is then tracked by noticing how brightness changes from one picture to the next. While this works, it can be slow, especially when there's a lot of activity.

Traditional Method: Relies on cameras capturing static frames.

Motion Tracking: Done by analyzing brightness changes between frames.

Limitation: Can suffer from delays in processing, particularly in complex environments.

This delay is a significant hurdle for applications like self-driving cars and advanced robotics, where quick reactions to moving objects are essential for safety and effectiveness.
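The frame-by-frame brightness comparison described above can be sketched in a few lines. This is a minimal illustration, not the method of any particular vision system: the function name `changed_pixels`, the threshold of 20, and the tiny hand-made frames are all assumptions chosen for the example.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale image: rows of 0-255 brightness values


def changed_pixels(prev: Frame, curr: Frame, threshold: int = 20) -> List[Tuple[int, int]]:
    """Return (row, col) of pixels whose brightness changed by more than `threshold`.

    The threshold value is an illustrative assumption, not a figure from the article.
    """
    moved = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            if abs(q - p) > threshold:
                moved.append((r, c))
    return moved


# Two tiny 3x4 frames: a bright "object" moves one pixel to the right.
frame_a = [
    [0, 0, 0, 0],
    [0, 200, 0, 0],
    [0, 0, 0, 0],
]
frame_b = [
    [0, 0, 0, 0],
    [0, 0, 200, 0],
    [0, 0, 0, 0],
]

motion = changed_pixels(frame_a, frame_b)
print(motion)  # [(1, 1), (1, 2)]: the pixel the object left and the pixel it entered
```

Note that every pixel of every frame pair is visited, so the work grows with resolution and frame rate. That is one way to see why this style of processing can lag in busy scenes.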

The Neuromorphic Approach: Learning from the Brain

Researchers are developing chips that take inspiration from how the human brain processes visual information. This field is known as neuromorphic engineering.

Inspiration Source: A part of the brain called the lateral geniculate nucleus (LGN), which acts as a filter and relay station between the eye and the main visual processing area. The LGN helps the brain focus on important or fast-moving things.

Hardware Solution: Instead of trying to improve the software in existing systems, scientists are building new hardware designed to mimic human vision.

Analog Computation: These new chips use a type of computation that is more like how brain cells work, which is generally more energy-efficient than the digital calculations in typical computers.

The core idea is to create hardware that processes visual data more directly and efficiently, reducing the time it takes to understand what's happening in the environment.

Key Developments and Materials

Several recent advancements highlight this new direction:

A Chip Mimicking the LGN

One notable development is a chip inspired by the lateral geniculate nucleus (LGN). This part of the brain helps filter and prioritize visual information.

Functionality: The chip aims to improve how robotic vision systems handle motion, similar to how the human visual system focuses processing power on fast-moving objects.

Underlying Algorithms: While the chip architecture is brain-inspired, it still uses optical-flow algorithms to interpret images.

Challenges: Such systems can still face difficulties in visually busy scenes with many movements happening at once.
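The idea of filtering a scene so that only the most active regions receive further processing can be illustrated in software. The sketch below is only an analogy for the LGN-style filtering described above and is not the chip's actual design: the names `tile_activity` and `select_regions`, the tile size, the `keep` count, and the `min_activity` noise floor are all hypothetical choices.

```python
def tile_activity(prev, curr, tile):
    """Sum the absolute brightness change inside each tile x tile block."""
    activity = {}
    for r in range(len(curr)):
        for c in range(len(curr[0])):
            key = (r // tile, c // tile)
            activity[key] = activity.get(key, 0) + abs(curr[r][c] - prev[r][c])
    return activity


def select_regions(prev, curr, tile=2, keep=1, min_activity=10):
    """Return up to `keep` tiles with the most change, most active first.

    Tiles below `min_activity` (an assumed noise floor) are ignored.
    """
    activity = tile_activity(prev, curr, tile)
    ranked = sorted(activity, key=lambda k: activity[k], reverse=True)
    return [k for k in ranked[:keep] if activity[k] >= min_activity]


# 4x4 frames: slight noise in the top-left, strong change in the bottom-right.
prev_frame = [[0] * 4 for _ in range(4)]
curr_frame = [
    [5, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 150],
    [0, 0, 0, 90],
]

regions = select_regions(prev_frame, curr_frame, tile=2, keep=2)
print(regions)  # [(1, 1)]: only the bottom-right tile is passed on
```

In a visually busy scene many tiles would clear the noise floor at once, so a filter like this would discard less, which is consistent with the difficulty noted above.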

Molybdenum Disulfide (MoS₂) as a Core Material

A specific material, molybdenum disulfide (MoS₂), is proving to be key in building these brain-like chips. This material is extremely thin, only a few atoms thick.

Properties: MoS₂ has useful optical and electrical characteristics.

Integration: It's used in creating neuromorphic chips that can detect motion, process information, and even store memories.

Energy Efficiency: This approach avoids the heavy data processing and high energy consumption typical of traditional digital systems.

MoS₂ enables a new class of devices that can process visual changes in real-time without needing a separate, powerful computer.

Performance Improvements

Tests and studies indicate significant speedups compared to current technologies.

Speed Increase: In tests, the new systems processed motion data four times faster than existing state-of-the-art algorithms.

Human-Level Perception: The goal is to achieve processing times within the range of human visual perception, estimated to be around 150 milliseconds.

| System Type | Processing Speed Metric | Comparison Basis |
|---|---|---|
| Conventional Robotic Vision | Varies, can be slow in complex scenes | Static frame analysis |
| Neuromorphic Temporal-Attention | ~150 milliseconds (target) | Human visual perception range |
| Brain-Inspired MoS₂ Chip (Lab) | 4x faster | State-of-the-art algorithms |
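To put the roughly 150 millisecond figure in perspective, a short calculation shows how far a vehicle travels while a perception system is still processing a frame. The vehicle speeds of 50 and 100 km/h are assumptions picked for illustration; they do not come from the reported studies.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled at `speed_kmh` during `latency_ms` of processing delay."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Assumed example speeds; 150 ms is the human-perception figure quoted above.
for speed in (50, 100):
    metres = distance_during_latency(speed, 150)
    print(f"{speed} km/h: {metres:.2f} m travelled in 150 ms")
# 50 km/h: 2.08 m travelled in 150 ms
# 100 km/h: 4.17 m travelled in 150 ms
```

Because distance scales linearly with latency, any reduction in processing time shortens this "blind" distance by the same factor.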

Potential Applications

The implications of faster, more efficient machine vision are far-reaching.

Robotics: Enabling more agile and responsive robots that can interact with their environment in real-time.

Autonomous Vehicles: Improving the decision-making speed of self-driving cars, making them safer and more reliable.

Advanced AI: Paving the way for AI systems that are not only faster and more efficient but also more adaptable.

This technology could fundamentally change how machines perceive and interact with the world around them.

Expert Analysis

The move towards neuromorphic hardware is seen as a crucial step for artificial intelligence.

"Unlike traditional digital systems, which require heavy data processing and consume substantial energy, this neuromorphic chip operates using analog-style computation that mimics neural processing."

This suggests a shift away from power-hungry, complex computational methods towards more efficient, bio-inspired designs.

Conclusion and Next Steps

The development of brain-inspired chips marks a significant stride in machine vision technology. By mimicking the efficiency and speed of human biological systems, particularly through materials like MoS₂ and by drawing inspiration from brain structures like the LGN, researchers are creating hardware capable of much faster motion detection and processing.

Key Finding: Neuromorphic chips can process visual motion data significantly faster than current methods, approaching human-level reaction times.

Impact: This holds the potential to revolutionize fields like robotics and autonomous driving by enabling more rapid and effective environmental interaction.

Future Work: Continued refinement of these neuromorphic materials and processing techniques is expected to unlock further capabilities and expand the potential applications of this transformative technology.

Sources Used:

Indian Express: Published ~4 minutes ago. Details a chip inspired by the LGN and its function in motion detection. https://indianexpress.com/article/technology/tech-news-technology/human-brain-inspired-chip-motion-improvement-10531829/

Tech Xplore: Published 3 days ago. Describes an international team's neuromorphic hardware system for faster automated driving decisions, referencing human visual perception speeds. https://techxplore.com/news/2026-02-bio-chip-robots-cars-react.html

Daily Galaxy: Published May 16, 2025. Reports on RMIT University's neuromorphic chip that mimics human vision, processing, and memory storage using MoS₂. https://dailygalaxy.com/2025/05/meet-the-brain-inspired-chip-that-sees-motion-and-stores-memories-faster-than-ever/

Rude Baguette: Published May 18, 2025. Features RMIT University's brain-like sensing device combining neuromorphic materials and signal processing for real-time vision. https://www.rudebaguette.com/en/2025/05/robots-can-finally-see-brain-inspired-chip-gives-humanoids-and-electric-vehicles-instant-vision-with-human-level-perception/

Science News Today: Published Aug 30, 2025. Discusses the demand for faster hardware in AI and the potential of neuromorphic computing for efficiency, mentioning MoS₂. https://www.sciencenewstoday.org/the-brain-inspired-technology-that-could-revolutionize-ai-forever