A series of AI-generated images depicting Alex Pretti, a Minnesota resident involved in an incident with ICE officers, has surfaced across various platforms. These manipulated visuals have been shared and presented in different contexts, raising concerns about misinformation. The images, which appear to show events not supported by available evidence, have circulated on social media and have been displayed in official settings, including the U.S. Senate floor and a House committee hearing. Their spread highlights the growing difficulty of distinguishing authentic content from artificially created content in public discourse.

The Spread of AI-Generated Imagery

Recent weeks have seen the proliferation of AI-generated images and videos that misrepresent events surrounding the incident involving Alex Pretti.

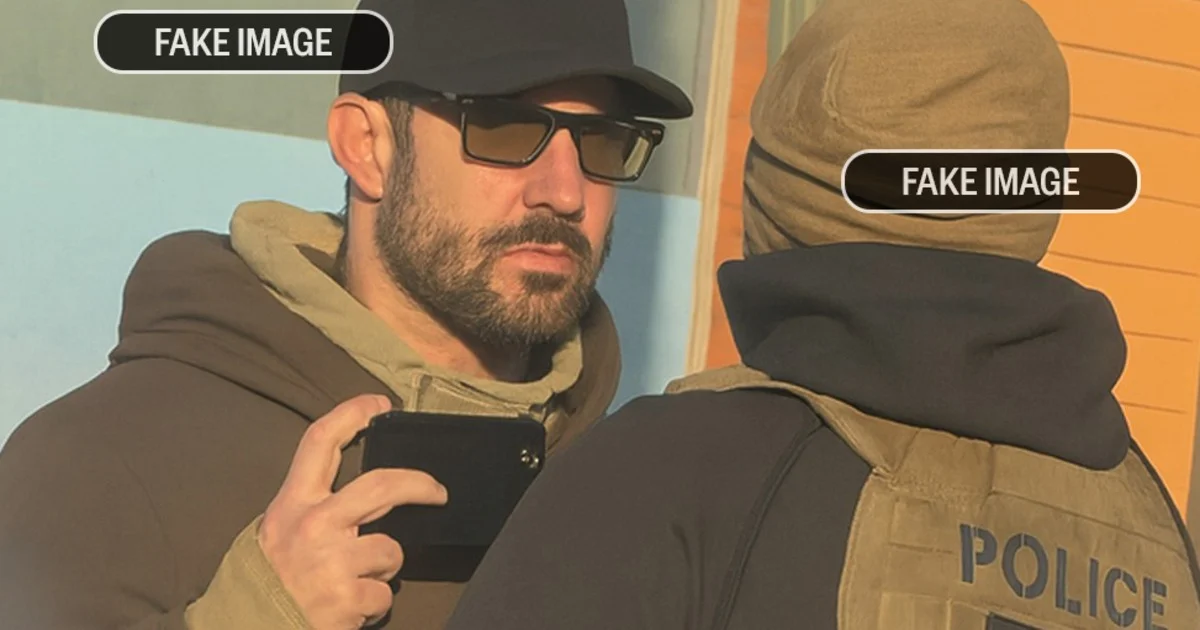

Initial Depictions: Early AI-modified images surfaced around January 27, 2026, aiming to depict Pretti's interaction with ICE officers. Some of these images showed Pretti holding a handgun, a detail contradicted by video evidence which shows him holding a phone before agents intervened.

Official Presentation: The spread of the manipulated imagery escalated when Senator Dick Durbin (D-IL) displayed an AI-generated image during a speech on the Senate floor on January 30, 2026. Reports suggest the image was presented without its authenticity first being verified.

Congressional Use: More recently, on February 10, 2026, Representative Bennie Thompson (MS-2), ranking Democratic member on the House Committee on Homeland Security, shared an AI-generated image during a committee hearing. This marked a further instance of such visuals being used in congressional proceedings.

Examination of Evidence and Authenticity

Multiple sources have investigated and confirmed the artificial nature of these images, while also attempting to trace their origin and impact.

Fact-Checking Initiatives: Organizations like Politifact have debunked claims that viral images of Alex Pretti were real photographs, confirming they were manipulated using AI tools, specifically Google's Gemini.

Visual Discrepancies: Close examination of some AI-generated images has revealed inconsistencies. For instance, one widely shared image depicting federal agents surrounding Pretti featured a "headless agent" and was identified as an AI enhancement of a low-quality bystander video.

Video Evidence: Available video evidence of the incident reportedly shows Pretti holding his phone, not a handgun, prior to agents taking action and disarming him.

The Role of Social Media and News Outlets

The dissemination of these AI-generated images has been facilitated by various online platforms and amplified by media reporting.

Social Media Sharing: Platforms such as X (formerly Twitter), Facebook, Instagram, and Threads have been used to share the manipulated images.

News Coverage and Corrections: News outlets have reported on the emergence and spread of these images. At least one, MS NOW, reportedly changed an AI-altered image of Pretti after facing backlash. The Minnesota Star Tribune identified one of the agents involved as Jonathan Ross.

Misinformation Campaigns: Beyond static images, there have been reports of AI-generated videos, including one on TikTok purportedly showing Pretti speaking with an ICE officer and another on Facebook depicting a police officer firing Pretti's gun. It remains unconfirmed whether the officer actually fired the weapon. AI-modified images also circulated in attempts to identify the ICE officer who fatally shot Renee Nicole Good, another U.S. citizen.

Expert Commentary and Implications

The proliferation of AI-generated content in sensitive contexts is a subject of growing concern among experts.

"The spate of AI deepfakes has added to swirling misinformation surrounding Pretti’s shooting…" - NBC News

"If Thompson has the facts on his side when presenting his case, he wouldn't have to keep relying on bogus evidence." - RedState

The use of AI-generated images in official settings and their rapid spread through social media pose a significant challenge to public understanding and the integrity of information. The trend indicates a need for enhanced media literacy and verification mechanisms to combat the erosion of trust caused by sophisticated digital manipulation.

Conclusion

The repeated appearance of AI-generated images related to the Alex Pretti incident reveals a pattern of misinformation that leverages widely available AI tools. These visuals first appeared on social media and were later presented on the Senate floor and in a House committee hearing. Fact-checking efforts have consistently identified the images as artificial, often noting visual anomalies and discrepancies with existing video evidence. The broader challenge is discerning truth when AI manipulation tools are readily accessible, which calls for vigilance and critical evaluation of digital content, particularly when it intersects with public events and political discourse.

Sources Used:

RedState: Published February 10, 2026. "Now It’s a Pattern: Another Dem Shares Fake AI Image of Alex Pretti Shooting During Committee Hearing." https://redstate.com/rusty-weiss/2026/02/10/now-its-a-pattern-another-dem-shares-fake-ai-image-of-alex-pretti-shooting-during-committee-hearing-n2199003

Context: This article reports on Representative Bennie Thompson's use of an AI-generated image during a House Committee hearing, framing it as a pattern of utilizing fake evidence.

NBC News: Published February 1, 2026. "AI-altered photos and videos of Minneapolis shootings blur reality." https://www.nbcnews.com/tech/tech-news/ai-altered-photos-videos-minneapolis-shootings-blur-reality-rcna256552

Context: This piece discusses the broader issue of AI deepfakes in Minneapolis, referencing Senator Dick Durbin's display of an AI image and its contribution to misinformation surrounding the Alex Pretti shooting.

NewsBreak: "MS NOW changes AI-altered image of Minnesota shooting victim Alex Pretti after backlash." https://www.newsbreak.com/news/4468722590914-ms-now-changes-ai-altered-image-of-minnesota-shooting-victim-alex-pretti-after-backlash

Context: This entry notes that MS NOW updated an AI-altered image related to Alex Pretti following public reaction, highlighting media responses to the use of such imagery.

Politifact: Published January 27, 2026. "This is an AI-manipulated image of Alex Pretti." https://www.politifact.com/factchecks/2026/jan/27/tweets/Image-AI-Minneapolis-Alex-Pretti-Immigration/

Context: This fact-check definitively identifies a viral image of Alex Pretti as being AI-manipulated, refuting claims of its authenticity and comparing it to video evidence.

Star Tribune: Published January 29, 2026. "AI image of Alex Pretti’s killing is the latest altered photo amid ICE surge in Minneapolis." https://www.startribune.com/ai-image-of-alex-prettis-killing-is-the-latest-altered-photo-amid-ice-surge-in-minneapolis/601571325

Context: This article details an AI-generated image depicting Alex Pretti's encounter with ICE, noting visual flaws and identifying an agent involved, while placing it within the context of increased ICE activity.

The Outpost: Published January 30, 2026. "AI-Altered Image of Alex Pretti Shown in US Senate." https://theoutpost.ai/news-story/ai-altered-image-of-alex-pretti-reaches-us-senate-floor-exposing-misinformation-crisis-23447

Context: This report confirms the presence of an AI-altered image of Alex Pretti on the U.S. Senate floor, highlighting its role in a broader misinformation crisis.
